Wow64 X86 Emulator

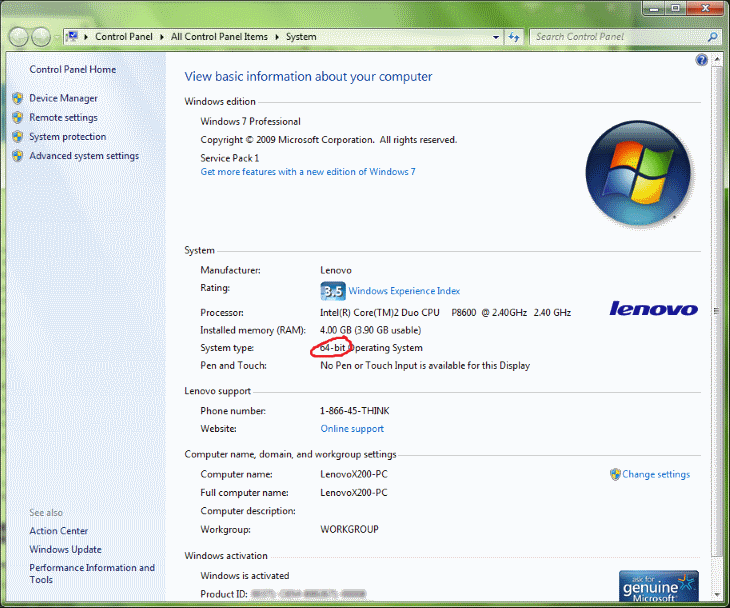

How do you activate the WoW64 emulator to run a 32-bit application? I don't know which version of Vista the person asking the question is running. On 64-bit desktop editions of Windows it is normally not something you switch on: launching a 32-bit executable runs it under WoW64 automatically. As the name implies, native 64-bit applications take advantage of the native 64-bit computing platform, while the Windows-on-Windows environment (also called WoW or WOW64) is an x86 emulator that allows 32-bit Windows-based applications to run on 64-bit Windows.
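The loader knows whether an executable is 32-bit x86 (and so needs WoW64) from the Machine field of its PE header. The sketch below reads that field from an in-memory copy of the file's first bytes. The helper names are hypothetical. The offsets and constants come from the PE/COFF format: `e_lfanew` at 0x3C, the `PE\0\0` signature, and the Machine values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* IMAGE_FILE_MACHINE_* values from the PE/COFF specification. */
#define PE_MACHINE_I386  0x014Cu /* 32-bit x86: runs under WoW64 on x64 Windows */
#define PE_MACHINE_AMD64 0x8664u /* x64: runs natively */
#define PE_MACHINE_ARM64 0xAA64u /* ARM64 */

/* Hypothetical little-endian field readers, independent of host byte order. */
static uint32_t read_le32(const uint8_t *p) {
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static uint16_t read_le16(const uint8_t *p) {
    return (uint16_t)(p[0] | p[1] << 8);
}

/* Hypothetical helper: returns the COFF Machine field of a PE image held in
   buf[0..len), or 0 if the buffer does not look like a PE image. */
uint16_t pe_machine(const uint8_t *buf, size_t len) {
    assert(buf != NULL);
    if (len < 0x40 || buf[0] != 'M' || buf[1] != 'Z')
        return 0;                             /* no DOS "MZ" stub */
    uint32_t pe_off = read_le32(buf + 0x3C);  /* e_lfanew */
    if (pe_off > len || len - pe_off < 6)
        return 0;                             /* header out of range */
    if (memcmp(buf + pe_off, "PE\0\0", 4) != 0)
        return 0;                             /* missing PE signature */
    return read_le16(buf + pe_off + 4);       /* Machine follows the signature */
}
```

On x64 Windows, an image reporting 0x014C would be run under WoW64, while one reporting 0x8664 would run natively.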

Adding to andriew's comment: x86's stricter memory model reduces the hardware's flexibility and enforces stricter ordering than is usually required. If you think you really need the x86 memory model, you're probably doing something wrong, but you can get it (at significant cost) by adding lots of memory fences. The Alpha was also interesting in that it tried extremely hard to avoid saddling itself or future versions with legacy baggage.

Unlike x86, ARM, MIPS, POWER, PA-RISC, SuperH, etc., Alpha was designed without a legacy 32-bit addressing mode. Of course, if you link against a malloc implementation that stays below the 4 GB boundary, you can use JVM-like pointer compression. (If you need to support heap objects with pointers to the stack, you'll of course also need your stack allocated below the 4 GB boundary.) A lot of pointer-heavy code, however, can be rewritten to use 32-bit array indexes instead. The designers also tried to get away without single-byte load and store instructions (many string manipulations don't really act on single bytes), though these were added in a later revision. A friend hired to write compilers for DEC shortly before the Compaq buyout told me that hardware engineers had to show simulated benchmark improvements when arguing for new instructions.

They pushed back hard to keep valuable instruction space from becoming a junkyard of legacy instructions. As mentioned, they made as few memory-ordering guarantees as practical and forced applications to use memory fence instructions to make their needs explicit to the processor. This left them more flexibility in implementing later models.
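The 32-bit-index alternative to pointer compression mentioned above can be sketched as a linked list whose links are indexes into a preallocated pool rather than 64-bit pointers. On a typical 64-bit ABI this shrinks each node here from 16 bytes to 8. This is a minimal illustration: the pool, its fixed capacity, the bump allocator and the `NIL` sentinel are all assumptions of the example.

```c
#include <assert.h>
#include <stdint.h>

#define POOL_CAP 1024u
#define NIL UINT32_MAX /* hypothetical sentinel meaning "no node" */

/* Each node links to the next by a 32-bit index, not by a pointer. */
struct node {
    int32_t  value;
    uint32_t next; /* 4 bytes instead of an 8-byte pointer */
};

/* Hypothetical fixed-size pool with a bump allocator. */
struct pool {
    struct node nodes[POOL_CAP];
    uint32_t used; /* next free slot */
};

/* Pushes value onto the front of the list whose head index is *head. */
void push_front(struct pool *p, uint32_t *head, int32_t value) {
    assert(p->used < POOL_CAP);
    uint32_t i = p->used++;
    p->nodes[i].value = value;
    p->nodes[i].next = *head;
    *head = i;
}

/* Sums a list by following index links until NIL. */
int64_t sum_list(const struct pool *p, uint32_t head) {
    int64_t total = 0;
    for (uint32_t i = head; i != NIL; i = p->nodes[i].next)
        total += p->nodes[i].value;
    return total;
}
```

Because indexes are relative to the pool, the same data also stays valid if the pool is copied or memory-mapped at a different address.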

The firmware (PALcode) was almost a hypervisor/nanokernel, with the OS kernel making calls into the PALcode. The PALcode version used for Tru64 UNIX/Linux implemented just two protection rings, while the version used with OpenVMS emulated more. As you may remember, as long as access violations trap to the most privileged ring, you can emulate an arbitrary number of rings between the least and most privileged ones.

I think it's perfectly sensible to be weaker than x86. But having to deal with data dependency, as you have to on Alpha, is just too cumbersome and hard to get right.
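For contrast, the explicit ordering a weak model asks for is simple to express when it is plain acquire/release. Below is a minimal sketch using C11 atomics; the variable and function names are hypothetical. The release store keeps the payload write from becoming visible after the flag. The acquire load keeps the payload read from happening before the flag is seen. Compilers lower these to ordinary moves on x86, where the hardware already provides this ordering, and to barrier or load-acquire/store-release instructions on weaker architectures.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

static int payload;        /* hypothetical ordinary, non-atomic data */
static atomic_bool ready;  /* hypothetical publication flag, initially false */

/* Producer: write the data, then publish with release ordering so the
   payload store cannot become visible after the flag store. */
void publish(int value) {
    payload = value;
    atomic_store_explicit(&ready, true, memory_order_release);
}

/* Consumer: if the acquire load sees the flag, the payload write that
   preceded the matching release store is guaranteed to be visible. */
bool try_consume(int *out) {
    if (!atomic_load_explicit(&ready, memory_order_acquire))
        return false;
    *out = payload;
    return true;
}
```

In a real program, `publish` and `try_consume` run on different threads. Calling them in sequence from one thread only exercises the interface.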

Acquire/release (or read/write/full) memory barriers are quite easy to understand, but data dependency barriers are damned finicky, and missing ones are really hard to debug. I'm more than a bit doubtful that the cost of a halfway sane (even if weak) coherency model is a relevant blocker to scaling up both CPU core counts and applications. At the moment the biggest issue seems to be developers' ability to write scalable software, and to come up with patterns that make writing scalable software more realistic.
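The data-dependency case arises when a reader loads a pointer and then dereferences it. Most CPUs keep the second load ordered after the first because its address depends on it. Alpha did not guarantee that, which is why the Linux kernel long carried a dedicated dependency barrier for it. In portable C11, the simplest safe choice is an acquire load of the pointer. `memory_order_consume` was meant for exactly this pattern, but mainstream compilers treat it as acquire. Here is a minimal sketch, with a hypothetical `struct config` standing in for the shared data:

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical shared object published by one thread, read by others. */
struct config {
    int timeout_ms;
    int retries;
};

static _Atomic(struct config *) g_config; /* initially NULL */

/* Writer: fully initialize the object, then publish the pointer with
   release ordering so readers never see a half-built object. */
void install_config(int timeout_ms, int retries) {
    struct config *c = malloc(sizeof *c);
    assert(c != NULL);
    c->timeout_ms = timeout_ms;
    c->retries = retries;
    /* The old object is leaked; reclaiming it safely needs RCU or
       hazard pointers, which this sketch omits. */
    atomic_store_explicit(&g_config, c, memory_order_release);
}

/* Reader: the acquire load orders the later dereference after the
   pointer load, even on CPUs (like Alpha) that would not order the
   dependent load by themselves. */
int read_timeout(void) {
    struct config *c = atomic_load_explicit(&g_config, memory_order_acquire);
    return c ? c->timeout_ms : -1;
}
```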

I suspect there's room for selectively weakening the coherency effects of individual instructions in some memory models; e.g. x86 atomic instructions that don't imply a full memory barrier (disregarding uncached access and such) would be great. Selectively lowering the coherency requirements makes it possible to do so in the hottest code paths.

'Wine Is Not an Emulator' (WINE) versus 'WINdows Emulator' (WINE): I've seen both interpretations used.

In this case, 'minimal hypervisor and address space translation layer' (more or less; I've no idea exactly what it does) is somewhat harder to remember. I think they were going for the simplest/shortest word that gets the point across. That document is likely to be read by people leaning toward executive/high-level positions rather than the detail-jugglers who shout at bare metal all day.

Address space translation is not a small thing to do in the Win32 API. You need to translate pointer parameters and return values for thousands of API functions. You also need to translate pointers in (possibly nested) structures. And you must do the same for each and every possible window message, whose payload may be any combination of integers, pointers, and structures of (structures of) pointers. The pointers may point to data, such as a string that must be readable and writable from either side of the address space, or to functions that can be called from either side. See for example this effort at writing a Win16 thunking layer in C#.
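To make the pointer-translation problem concrete: a 32-bit guest hands over structures whose pointer fields are 4-byte guest addresses. A thunk has to rewrite each one, following nested pointers, before native 64-bit code can use it. This minimal sketch assumes three things:

- Guest addresses map to host memory by adding a fixed `guest_base`. This is zero in a layout like WoW64's, where the 32-bit code lives in the low 4 GB of the 64-bit process.
- The host is little-endian, like x86.
- The structure and function names are hypothetical.

It only shows the guest-to-host direction; results, callbacks and function pointers would need the reverse mapping as well.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical guest (32-bit) layout: pointers are 4-byte guest addresses. */
struct guest_msg {
    uint32_t text;   /* guest address of a NUL-terminated string */
    uint32_t next;   /* guest address of another guest_msg, or 0 */
    int32_t  flags;
};

/* Hypothetical host (64-bit) layout of the same logical structure. */
struct host_msg {
    const char      *text;
    struct host_msg *next;
    int32_t          flags;
};

/* Translates a guest address to a host pointer; guest 0 stays NULL. */
static void *g2h(uint8_t *guest_base, uint32_t addr) {
    return addr ? guest_base + addr : NULL;
}

/* Converts a chain of guest messages starting at guest address addr into
   host structs in out[0..cap), following the nested next pointers.
   Returns the number of messages converted. */
size_t thunk_msgs(uint8_t *guest_base, uint32_t addr,
                  struct host_msg *out, size_t cap) {
    assert(guest_base != NULL && out != NULL);
    size_t n = 0;
    while (addr != 0 && n < cap) {
        struct guest_msg g;
        /* memcpy because guest structs may be unaligned in host terms;
           assumes guest and host share little-endian byte order. */
        memcpy(&g, g2h(guest_base, addr), sizeof g);
        out[n].text  = g2h(guest_base, g.text);
        out[n].flags = g.flags;
        out[n].next  = NULL;
        if (n > 0)
            out[n - 1].next = &out[n]; /* rebuild the link as a host pointer */
        addr = g.next;
        n++;
    }
    return n;
}
```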

You can do this in 99% of cases on Linux using qemu-user, but I'm not aware of an equivalent for Windows (and booting an entire operating system in an emulator is a poor experience and even slower). I imagine this Windows variant works like qemu-user and Rosetta.

QEMU has some bad performance penalties, partly related, I think, to its generic JIT (TCG), extremely strict floating point, and full host memory isolation. ExaGear is significantly closer to native performance, but I think it only supports emulating 32-bit x86. This is a trade-off with any emulation, really.

The more hardware-accurate your emulation gets, the more you have to keep track of in virtual registers and state, and all that extra tracking adds up in a hurry. Native virtualization avoids much of that performance penalty by using the hardware features directly where possible. But that's only really feasible on systems with nearly identical hardware; x86 is pretty standardized at this point and virtualizes quite well.
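One common way emulators trim the cost of tracking state is lazy flag evaluation. Rather than computing x86's arithmetic flags after every instruction, the translator records the last flag-setting operation and its operands. It derives a flag only when a later instruction actually reads it. This minimal sketch handles 32-bit ADD and SUB and only ZF, SF and CF. The type and function names are hypothetical. Real x86 also defines OF, PF and AF, which are omitted here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical record of the last flag-setting operation. */
enum lazy_op { OP_ADD, OP_SUB };

struct lazy_flags {
    enum lazy_op op;
    uint32_t a, b, result; /* operands and result; no flags stored */
};

/* Emulated ADD/SUB: compute the result, remember the inputs, skip flags. */
static uint32_t emu_add(struct lazy_flags *f, uint32_t a, uint32_t b) {
    uint32_t r = a + b;
    *f = (struct lazy_flags){ OP_ADD, a, b, r };
    return r;
}

static uint32_t emu_sub(struct lazy_flags *f, uint32_t a, uint32_t b) {
    uint32_t r = a - b;
    *f = (struct lazy_flags){ OP_SUB, a, b, r };
    return r;
}

/* Flags are materialized only on demand, e.g. for a conditional jump. */
static bool flag_zf(const struct lazy_flags *f) { return f->result == 0; }
static bool flag_sf(const struct lazy_flags *f) { return (f->result >> 31) != 0; }
static bool flag_cf(const struct lazy_flags *f) {
    return f->op == OP_ADD ? f->result < f->a  /* unsigned carry out */
                           : f->a < f->b;      /* unsigned borrow */
}
```

Since most flag results are overwritten before anything reads them, the common case pays only for a few stores.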

ARM is much more varied and harder to virtualize, even on other ARM platforms, due to differing feature sets.

If your experience of ARM emulation of x86 is qemu, especially the full-system variant, you're getting something much slower than emulation needs to be:

- Anything floating point or SIMD is generally done with a big mass of C helper functions rather than fully inline, and it's always unrolled rather than translated into native SIMD. You can blame qemu's choice to use an architecture-neutral IR, but even with that design it could be a lot smarter than it is. In any case, a JIT designed for a specific guest/host architecture combination should be able to produce much more efficient instruction sequences.

- In system mode, qemu has to emulate page tables. It does this by translating every load/store into a call to a stub that maps the given virtual address to a 'physical' one before loading. This is quite slow. User-mode qemu (where qemu is a host-arch Linux process pretending to be a single target-arch Linux process) is faster because it doesn't need to perform any translation itself; the host page tables provided by the native kernel do the job. Here too qemu could be improved: it's possible to use host page tables for full system emulation, though in some cases at the expense of hardware accuracy. But AFAIK Microsoft's emulator is user-mode, so this isn't an issue there either.

Those are only the big issues. I think there are a lot of small cases where there's room to do better than qemu, either in general or by optimizing for a specific architecture pair. Also, I'm not sure how much this matters, but translating x86 to ARM64 has some small built-in advantages over other pairs due to the nature of the ISAs. x86 has few general-purpose registers (8 in 32-bit mode, 16 in 64-bit mode), while ARM64 has 31. So you can map each x86 register to an ARM register throughout the emulation; going the other way around, you need to constantly shuffle registers to and from memory.
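The system-mode cost described above comes from a software MMU. Every guest load or store must map a guest virtual address to host memory before it can touch anything. The minimal sketch below shows the fast-path/slow-path structure with a direct-mapped software TLB. The page size, TLB size, and the flat `page_table` array standing in for a real guest page-table walk are assumptions. A real JIT would inline the fast path into the translated code rather than call a function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define GUEST_PAGE_BITS 12u
#define GUEST_PAGE_SIZE (1u << GUEST_PAGE_BITS)
#define TLB_SIZE        256u
#define GUEST_PAGES     16u

/* Hypothetical stand-in for the guest's page tables: virtual page -> host page. */
static uint8_t *page_table[GUEST_PAGES];

struct tlb_entry {
    uint32_t vpage; /* guest virtual page number cached in this slot */
    uint8_t *host;  /* host address of that page; NULL means empty */
};
static struct tlb_entry tlb[TLB_SIZE];

/* Must be called whenever the guest changes its page tables. */
void tlb_flush(void) {
    for (size_t i = 0; i < TLB_SIZE; i++)
        tlb[i].host = NULL;
}

/* Slow path: consult the page table and refill the direct-mapped slot. */
static uint8_t *tlb_fill(uint32_t vpage) {
    /* A real emulator would raise a guest page fault here instead. */
    assert(vpage < GUEST_PAGES && page_table[vpage] != NULL);
    struct tlb_entry *e = &tlb[vpage % TLB_SIZE];
    e->vpage = vpage;
    e->host = page_table[vpage];
    return e->host;
}

/* Every emulated 32-bit guest load pays for this lookup. */
uint32_t guest_load32(uint32_t vaddr) {
    uint32_t vpage = vaddr >> GUEST_PAGE_BITS;
    struct tlb_entry *e = &tlb[vpage % TLB_SIZE];
    uint8_t *page = (e->host != NULL && e->vpage == vpage) ? e->host
                                                           : tlb_fill(vpage);
    uint32_t v;
    /* Assumes the access does not straddle a page boundary. */
    memcpy(&v, page + (vaddr & (GUEST_PAGE_SIZE - 1)), sizeof v);
    return v;
}
```

Even on a TLB hit, each guest load becomes a shift, an index, a compare and a branch before the real load. User-mode emulation avoids this because the host MMU does the mapping.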

And a small one: ret on x86 requires the use of the stack pointer and memory, while ARM64 ret just takes an arbitrary register argument. (In both cases, the visible semantics are those of a regular indirect jump. The instructions are optimized to return quickly to the instruction after the corresponding call using a hardware return-address stack.) All indirect jumps, including returns, have some emulation overhead because you need to translate the guest code address to a native one; it's a bit easier when the host instructions are more flexible.

Or an overabundance of I/O. A friend showed me the industry-standard database app used to manage most Internet-connected second-hand bookshops (I think).

Its development decisions went something like: 'Hey, let's be sooooo awesome and update the search results live, as you type! Wow, without even a one-second delay this is SO REALTIME, like we're in 2187!' (later) 'Do we need to index the database? Eh, nah. I don't think our custom database solution even supports indexing.' In my friend's case, the store he's at has tens of thousands of books.

Cue perpetual SSD replacements by all the shops using this software. My approach to using it (when my friend and I were discussing it while I visited) was to hit Win+R to get a text box, type my search string, then ^C/ESC/^V it over. The database app would lock up for about 1.2 seconds per keypress.

Back then, 'faster' really meant faster. These days, there's far less of a gap between processor generations for consumer use cases.

Running Firefox on Windows 10 to check Gmail and Facebook, with occasional Word usage, probably wouldn't feel much slower on an Apple A10 processor than on a Skylake Core i5. We long ago reached the point where an iPad processor was fast enough for consumer usage, and I knew plenty of college students who were happy with their Surface (non-Pro, ARM-based). You and I want the fastest machines possible, and right now that's x86. Everyone else, I doubt, has even given it a thought, and I don't think they'd notice. My question is: how would this affect the market?

Apple will stay Apple. I don't think they'll go anywhere. The question is Google.

If this had happened in 2008, I don't think Android would have taken off anywhere close to the way it did. On one hand, Android has millions of apps already on the market. On the other hand, Microsoft now has potentially millions of old, existing applications.

I don't think it will make a dent in the phone market. A phone is too commonly used as a handheld rather than a station, and Windows apps are useless there.


On the other hand, it could tank the Android tablet market. I agree on the phone side: how many of those millions of apps are usable on a phone screen, with a phone interface? This sounds like cool technology, but this particular use case seems of very limited use on phones.

However, on the tablet side, it may allow Microsoft to bring down the price of the Surface a bit while still maintaining legacy app compatibility. My understanding is that Intel caught up with ARM on performance per watt a couple of years ago. But how does the idle power consumption of 64-bit Atom processors compare with 64-bit ARM offerings these days? For many consumer use cases, idle power consumption has a bigger impact on battery life than performance per watt does.
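The idle-power point can be made concrete with the usual duty-cycle average. If the chip is busy a fraction d of the time, average power is d * P_active + (1 - d) * P_idle, and battery life is capacity divided by that. This is a minimal sketch of that arithmetic; the function names and every number used with it are hypothetical.

```c
#include <assert.h>

/* Average power of a device that is active a fraction `duty` (0..1) of the
   time and idle the rest: duty * P_active + (1 - duty) * P_idle. */
double avg_power_w(double active_w, double idle_w, double duty) {
    assert(duty >= 0.0 && duty <= 1.0);
    return duty * active_w + (1.0 - duty) * idle_w;
}

/* Battery life in hours for a battery of `capacity_wh` watt-hours. */
double battery_hours(double capacity_wh, double active_w,
                     double idle_w, double duty) {
    return capacity_wh / avg_power_w(active_w, idle_w, duty);
}
```

Take made-up figures for a 30 Wh battery and a 5% duty cycle. A chip drawing 4 W active and 0.1 W idle averages 0.295 W, or about 102 hours. A chip drawing 2 W active but 0.3 W idle averages 0.385 W, or about 78 hours. At that duty cycle, the idle term dominates.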

I'm a bit sad this news came out after SoftBank bought up my ARM shares, but I'm glad to see more evidence that we may yet get the x86 monkey off our backs.

Thus far, the approach (dating back to Windows 8) seems to be offloading that need to the Store (cloud) backend and/or another installer that delivers the right bits instead of delivering everything. This is clearest with .NET applications and the .NET Native stack on Windows. There, most of the work of producing platform binaries in .NET Native builds actually happens on Store servers. But even Windows 8's Store encouraged uploading .NET IL. In Windows 8, the client-side Store app did the final AOT compile to ARM/x86, and in 8.1 that step was offloaded to Store servers.
